From Privacy to the Quiet Expansion of Surveillance Governance

2026 Election Issues Series · Part XI

In democratic societies, freedom has long been understood as a condition of non-interference. As long as individuals act within the law, they are presumed free to choose how they live, move, associate, and express themselves. Government authority, at least in theory, begins only beyond clearly defined boundaries.

This understanding has shaped the modern intuition of liberty: freedom exists inside a visible line, while regulation operates outside it. The expansion of artificial intelligence has not openly abolished this framework. Instead, it is gradually rendering the boundary itself less visible—and in some cases, less meaningful.

When Freedom Is No Longer About What You Do, but How You Are Interpreted

Traditionally, legal systems evaluate freedom through observable actions. Law asks whether a person has done something prohibited, whether a rule has been violated, whether responsibility can be assigned after the fact.

AI alters this sequence. Across many institutional and market contexts, systems increasingly focus not on isolated actions, but on behavioral patterns accumulated over time. Past choices, inferred preferences, and predicted future behavior now carry greater weight than single decisions.

Eligibility for services, access to opportunities, or classification as “low risk” often depends not on what a person has just done, but on how data systems interpret their overall profile.

Under these conditions, freedom no longer resides solely in discrete moments of action. It becomes distributed across a continuously updated data trace—one that individuals rarely see, but institutions routinely consult.

Surveillance Without Watching: Prediction as Governance

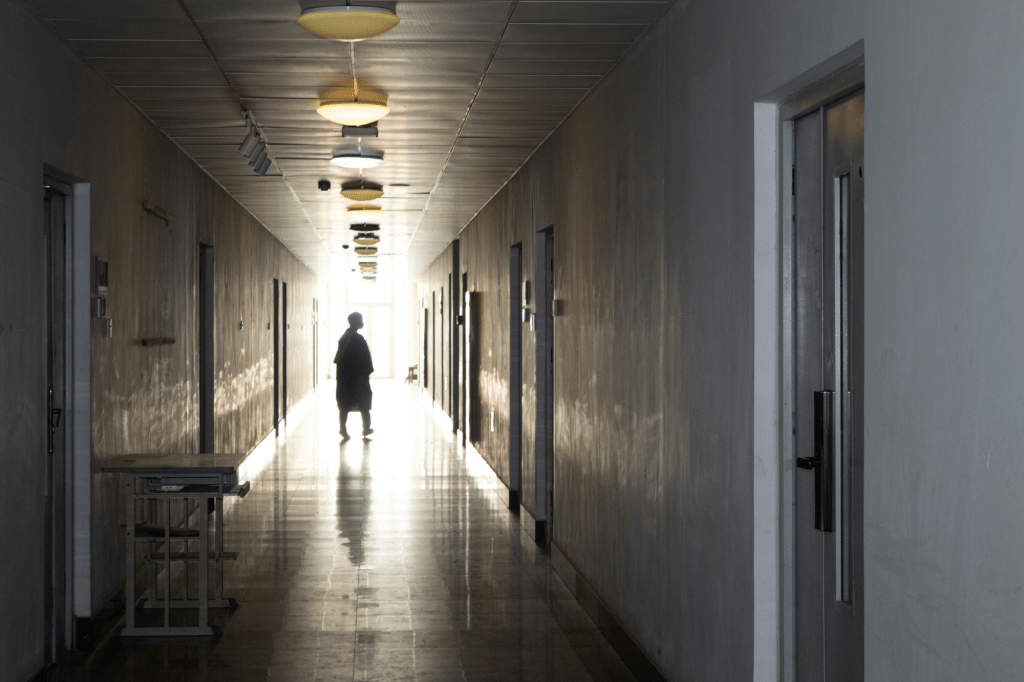

When surveillance is discussed, it is still commonly imagined as direct observation: cameras, monitoring officers, visible enforcement. AI-driven surveillance operates differently.

Systems do not need to watch individuals in real time. They infer. By comparing vast numbers of similar cases, algorithms categorize individuals, anticipate behavior, and assign probabilistic risk long before any action takes place.

The critical shift lies here: governance no longer responds primarily to what people do, but to what systems expect them to do.

Individuals remain formally free to act. Yet the institutional consequences of their actions—approval, delay, scrutiny, denial—are often pre-shaped by predictions made in advance. Freedom is not prohibited; it is pre-structured.

Why Privacy Erodes Through Consent and Convenience

Legal discussions of privacy tend to focus on violation: unauthorized collection, lack of consent, unlawful access. AI-driven governance rarely depends on such clear breaches.

More often, data extraction occurs through mechanisms that are legal, consensual, and framed as beneficial. Efficiency, safety, personalization, and risk management provide persuasive justifications for ever-expanding data collection.

Over time, privacy is not so much taken away as redefined. It becomes a negotiable asset—something individuals are encouraged to exchange for convenience or access. The boundary of freedom retreats quietly, not through coercion, but through normalization.

From Rights Protection to Behavior Management

The deeper transformation brought by AI is not the erosion of a single right, but a shift in the logic of governance itself.

Traditional governance intervenes after actions occur. Responsibility is assessed retrospectively; sanctions follow violations.

AI-enabled governance increasingly operates prospectively. Risk scores, predictive models, and compliance algorithms seek to manage behavior before deviation occurs.

In such systems, freedom is no longer the default condition. It becomes something that must fit within model expectations—permitted not because it is lawful, but because it is statistically acceptable.

When Boundaries Move from Law to Algorithms

The migration of boundaries from law to algorithms is the most consequential development of all. Legal boundaries, however imperfect, are visible, contestable, and theoretically subject to democratic challenge. Algorithmic boundaries are often opaque, proprietary, and resistant to explanation.

The critical issue is not whether AI should be used, but who defines its operation, who understands its consequences, and who possesses the authority to question its outcomes.

As the limits of acceptable behavior migrate from legal texts to technical systems, traditional mechanisms of accountability strain to keep pace.

Is Freedom Still the Starting Point?

Artificial intelligence has not declared the end of freedom. Instead, it is altering the conditions under which freedom exists.

When behavior is anticipated, choices are nudged, and risks are flagged in advance, a fundamental question emerges: Is freedom still the starting assumption of governance, or has it become a variable to be managed?

This question extends beyond technology and privacy. It speaks to the future character of democratic governance in an age where prediction increasingly precedes judgment.

By Voice in Between
